
Hi, I am an M.S. in Robotics student at Carnegie Mellon University, advised by Prof. Shubham Tulsiani. Previously, I was a research intern in the Visual Computing Lab at Seoul National University, advised by Prof. Hanbyul Joo. I completed my B.S. (Summa Cum Laude) in Mechanical Engineering and Mathematics at Seoul National University.


My research goal is to enable robots to perform complex manipulation tasks: those involving long-horizon planning, contact-rich interactions, and high-precision dexterous control. * denotes equal contribution.


Yanbo Xu*, Yu Wu*, Sungjae Park, Zhizhuo Zhou, Shubham Tulsiani. Preprint, 2025. Paper / Website / Code


Sungjae Park, Homanga Bharadhwaj, Shubham Tulsiani. ICRA, 2026. Paper / Website / Code


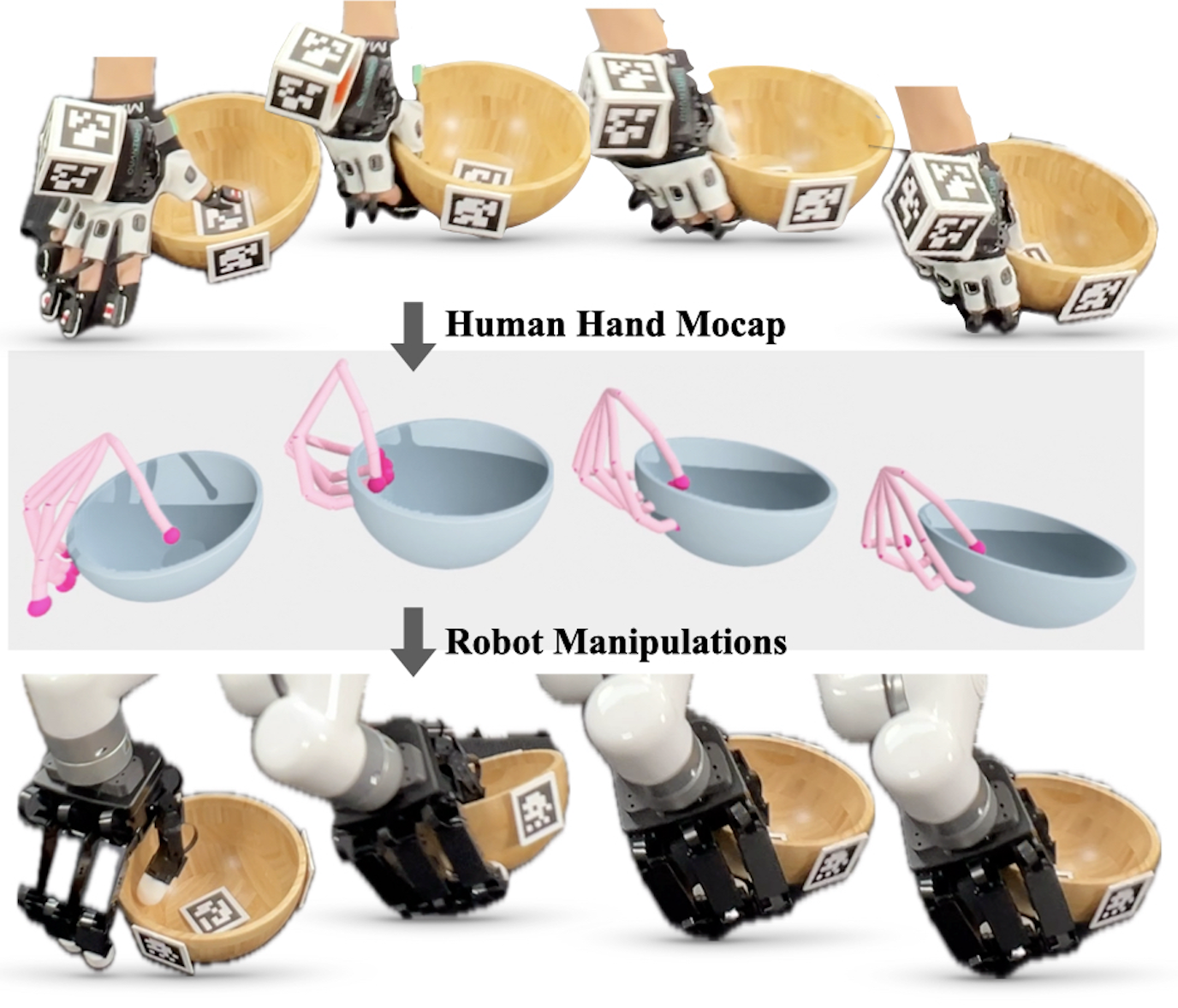

Sungjae Park*, Seungho Lee*, Mingi Choi*, Jiye Lee, Jeonghwan Kim, Jisoo Kim, Hanbyul Joo. CoRL X-Embodiment Workshop, 2024. Paper / Website


DROID Dataset Team. RSS, 2024. Paper / Website / Code


Open X-Embodiment Collaboration. ICRA, 2024 (Best Conference Paper Award). Paper / Website


Student Instructor, Spring 2022

Undergraduate Tutoring, Spring 2018, Fall 2018, Spring 2021, Fall 2021

Undergraduate Tutoring, Spring 2021


Reviewer for NeurIPS, ICLR, ICRA, RA-L, and CoRL.
